- AI Weekly Wrap-Up

New Post 1-22-2025

Top Story

Trump announces $500 billion “Stargate” data center project, and scraps AI safety order

Elections have consequences. Newly inaugurated President Trump lost no time in putting his own stamp on the AI landscape. First, he voided outgoing President Biden’s major executive order on AI safety, which required AI model makers to make their latest models available for government testing, opting instead for a lighter regulatory touch that relies on AI companies to self-regulate. Second, he orchestrated a joint announcement of a massive new AI infrastructure effort, with tech titans pledging to plow $500 billion into building AI data centers all over the US.

OpenAI CEO Sam Altman at the Stargate announcement with Trump and the CEOs of Softbank and Oracle.

Clash of the Titans

China’s DeepSeek shows once again that the US is only slightly ahead in AI - if at all

Last month one of the big stories in AI was that a Chinese AI company known as DeepSeek had released an open-source model that equaled the performance of the leading models from OpenAI, Google, and Facebook/Meta, but was smaller, more efficient, and much, much cheaper to train.

Also last month, OpenAI and Google released even more powerful models that use step-by-step reasoning to improve performance without needing larger datasets to train on. So, advantage US, yes?

Not so fast. This month DeepSeek released another open-source model, known as R1, that equaled the performance of the US “reasoning” models. And again, this model was smaller, more efficient, and way, way cheaper to run.

It appears that China’s main reaction to the stringent export controls on advanced AI chips imposed by the US government is to innovate the heck out of AI architecture, so as to erase any US advantage.

China’s DeepSeek AI model equals or beats OpenAI models on a variety of benchmarks.

AI speeds up the Pentagon’s “kill chain”, while defense-tech Anduril builds a war drone mega-factory

The US Department of Defense’s chief digital and AI officer, Dr. Radha Plumb, was quoted recently as saying that AI is speeding up the Pentagon’s “kill chain”, the process of identifying, tracking, and eliminating threats using sensors, software, and weapons. Over the last 2 years, AI-enabled defense technology companies have garnered billions in government contracts, making defense one of the fastest-growing uses of AI.

One beneficiary of the defense technology boom has been Anduril, a company co-founded by Palmer Luckey, who sold a virtual-reality headset company he started in his college dorm room to Facebook for $2 billion at age 22. Anduril specializes in autonomous drones for military applications, and last week it announced that it will build a billion-dollar “hyperscale” (i.e. humongous) factory in Columbus, Ohio to mass-produce war drones.

Billionaire boy genius Palmer Luckey with one of Anduril’s autonomous war drones.

Fun News

World Bank study: 6 weeks of AI tutoring produces 2 years of learning

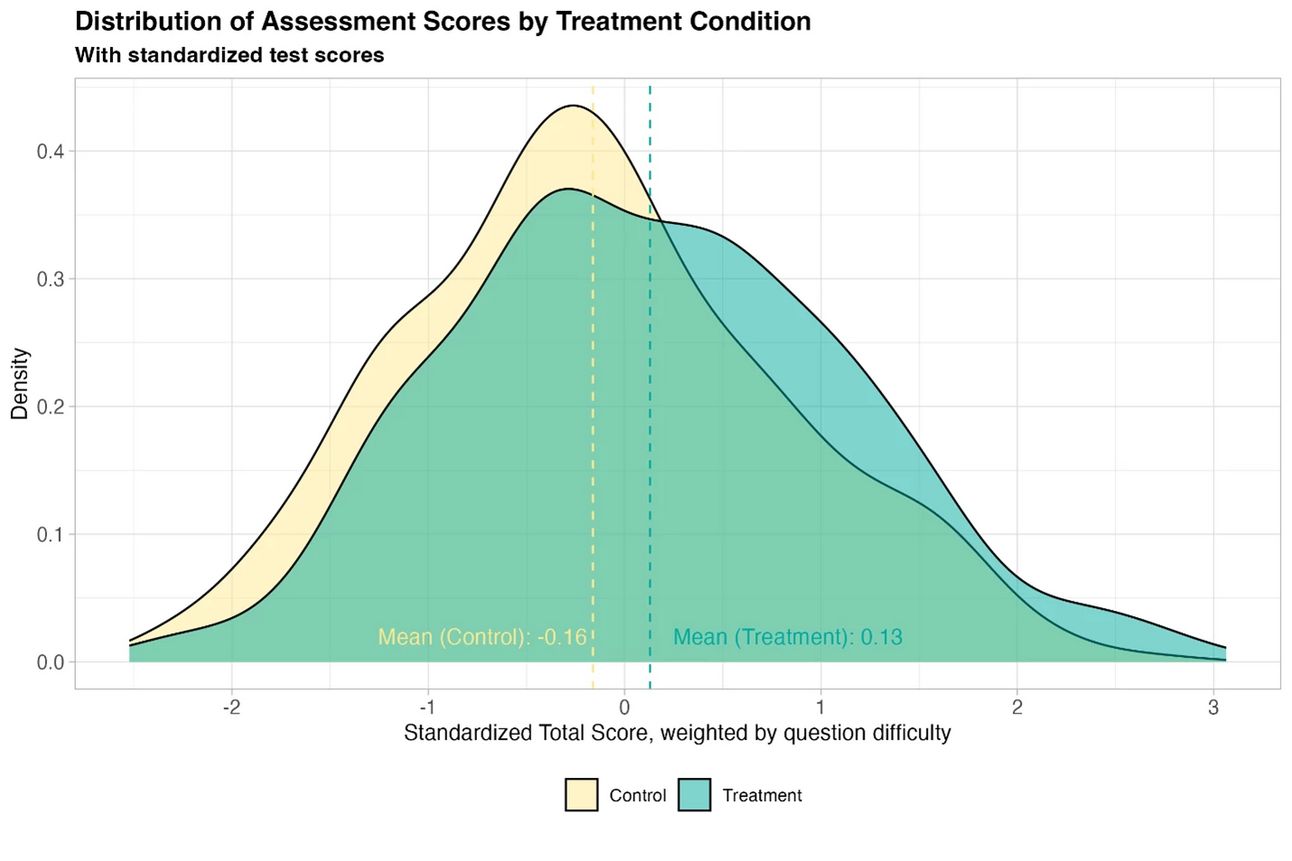

The venerable World Bank has just released the results of a randomized controlled trial of using an AI chatbot (GPT-4) as a tutor for students learning English in Nigeria. In only 6 weeks the students’ performance improved by the equivalent of 2 years of normal progress. When compared to a database of educational intervention studies, this pilot program outperformed 80% of them. In addition, the AI tutor seemed to benefit weaker students even more than stronger students, allowing the weaker students to narrow the performance gap with their stronger peers.

6 weeks of AI tutoring increased students’ performance as much as 2 years of normal progress.

Colossal Biosciences raises $200 million to de-extinct the mammoth

Colossal Biosciences is a $10-billion research and development juggernaut on a mission to bring back 3 iconic animals from extinction - the woolly mammoth, the Tasmanian tiger, and the dodo. Founded by legendary genetics researcher George Church of Harvard and MIT, and serial entrepreneur Ben Lamm, the company employs 170 scientists in 4 different laboratories, and has research partnerships with 17 academic labs worldwide. The company’s plan to bring back the mammoth involves mapping its entire DNA, finding the differences between the mammoth genome and that of its nearest living relative, the Asian elephant, then using the CRISPR gene-editing tool to graft the mammoth-specific genes into the DNA of cells from the Asian elephant. These cells are then fused with egg cells from an Asian elephant and implanted into a female Asian elephant’s womb for gestation. All of these steps are enabled by AI, computational biology, and synthetic biology. It’s a Manhattan Project or moonshot-scale endeavor, all financed by private investors captivated by its ambitious goal and cutting-edge science.

Colossal Biosciences wants to bring back the woolly mammoth.

Pew Research finds that 26% of US teens use ChatGPT for schoolwork

A report last week from the Pew Research Center found that 26% of US teens (age 13 to 17) are using ChatGPT for schoolwork, which is double the share in 2023. Interestingly, a larger share of Black and Hispanic students used the chatbot (31% each), perhaps because they have fewer other options for schoolwork help than white students.

Usage of ChatGPT for schoolwork has doubled, to 26% of US teens.

2025 may be the Year of the Robot

If 2023 was the year of the AI chatbot revolution, 2025 may prove to be the breakout year for robots. Progress in robotics is proceeding at an extremely rapid rate, with companies chasing a potential worldwide demand that could top a trillion dollars annually. Two recent models from China show the current state of the art. First, Unitree’s G1 humanoid robot has gotten a software upgrade that makes its gait much more fluid and agile. That is, less, um, “robotic”.

Another Chinese robotics company, Mirror Me, eschews the trendy humanoid form for the utilitarian design of a robot dog - one that can run 100 meters in 10 seconds. (For comparison, Usain Bolt’s world record for the 100-meter dash is 9.58 seconds.) Doglike robots are increasingly being designed for use as pack animals in warfare, as well as for various industrial applications.

Unitree’s agile G1 humanoid robot no longer walks like a robot.

Mirror Me’s Black Panther II robot dog can run almost as fast as Usain Bolt.

AI in Medicine

OpenAI’s new GPT-4b AI model designs rejuvenation proteins

AI powerhouse OpenAI has just announced that it has developed an AI model that designs proteins that can transform mature cells back into stem cells. Stem cells are the immature, undifferentiated cells that can develop into a variety of adult, specialized cells. For years, there has been great excitement about the potential for using stem cells for curing multiple diseases, or even regrowing damaged organs. Stem cells today are mostly harvested from a patient’s blood, or from umbilical cord blood or placental tissue after a birth. Recent research has focused on reprogramming adult cells back into stem cells. OpenAI has announced GPT-4b, a new biomedical AI model that has already designed proteins that are 50 times more active in reprogramming mature cells to stem cells than those in current scientific use, which are derived from natural signaling proteins. This work was performed in collaboration with Retro Biosciences, a startup focused on life extension which has been funded by OpenAI’s CEO Sam Altman with $180 million of his own money. The hope is that learning how to rejuvenate cells will lead to breakthroughs in how to extend the human lifespan.

OpenAI CEO Sam Altman is betting big on AI-enabled life extension.

Stanford AI drafts messages to patients explaining lab results

Physicians (on average) are notoriously bad at explaining medical results to patients, and multiple studies have shown that patients generally prefer explanations from AI chatbots, which are polite, thorough, and couched in simple and understandable terms. The problem is made worse by today’s open chart laws, which require sharing medical information with the patient as soon as it is finalized. This gives the patient important but complex information which they are not trained to understand. Now Stanford is developing an AI chatbot that will draft an explanation for each patient’s lab results, which the physician can review, edit, and send. If successful, this will lift an administrative burden from the physicians, while making sure that the patient gets timely, accurate, and understandable information about their results.

Lab results can be confusing for patients. AI can help.

AI designs protein antidotes to lethal snake venoms

Snakebites kill 100,000 people worldwide each year. But the current method for developing anti-toxins is hideously slow, expensive, and dangerous. It involves milking a snake for its venom (which has been likened to handling a live hand grenade), injecting it into horses, then harvesting the horse antibodies weeks later. Now Danish scientist Timothy Jenkins has teamed with 2024 Nobel-prizewinner David Baker to use AI to design blocking proteins for cobra toxin, which grab onto the part of the toxin that helps it attach to cells. In an experiment just published in Nature, Jenkins and Baker injected mice with the cobra toxin, and then the AI-designed blocking protein either immediately or 15 minutes later. Every mouse survived. This is just a first step toward proving that the method is effective, safe, and practical in humans, but this approach clearly holds great promise for neutralizing a wide range of life-threatening toxins.

A Mozambique spitting cobra may have met its match with AI-designed anti-toxins.

One doctor’s vision of an AI-centric medical future

As we have previously reported, today’s most advanced AI models are outperforming physicians at diagnosing complicated cases, as well as at explaining medical results to patients in understandable language. Tellingly, AI chatbot answers to patient questions are routinely rated as higher in empathy than the answers of actual physicians (because they are polite and explain things thoroughly in simple terms). One physician posted on Reddit, the online discussion site, a vision of a future in which a Nurse Practitioner or Physician Assistant takes the patient’s description of their symptoms and the available medical data, then feeds it to the AI for diagnoses and treatment plans. The human professional then further explains the AI’s recommendations and tends to the emotional issues involved. One onsite physician can supervise multiple NPs and PAs. The key quote: “Compared to other jobs, being a doctor is mostly just running algorithms, something an AI can do faster and better.” The lesson for lots of professionals may be: in an age of ubiquitous and cheap intelligence, empathy and communication skills may become more important than expertise.

That's a wrap! More news next week.