- AI Weekly Wrap-Up

New Post 1-7-2026

Top Story

2025: The Year in Review, and The Year Ahead

In this issue, we take stock of the year just past, and make predictions about what’s in store for 2026. 2025 was a tumultuous year, with staggering advances in AI model capabilities, the meteoric rise of China as a global AI powerhouse and the acknowledged leader in open source, and jaw-dropping investments in data centers. Meanwhile, AI projects in large companies delivered mostly disappointing results, entry-level jobs in software engineering dried up, and deepfakes became ever harder to distinguish from reality.

2026 promises to bring yet another new paradigm to the fore - AI agents that can semi-autonomously perform tasks that would otherwise require a human being. More countries will work to control their own AI and data, more companies will want small, capable models that can run locally on their own hardware, and more people will want the privacy and control that come with having a personal AI on their phone or laptop.

Given all the progress that has come before, and all that potentially lies ahead, it is a good time to take stock, and ask the question: “What sort of future do we want AI to usher in? And how do we get there?”

2025: Major Trends

The Year of Reasoning

In 2024, AI companies made their models smarter by making them bigger, and by training them with more data. In 2025, model makers increased performance by making their AI models plan and check their work. Really. I am not kidding here, and I am not making this up. It turns out that some of the brightest AI scientists on the planet had to “discover” that thinking goes better when you don’t just say the first thing that pops into your head, but you first analyze the question, then plan a way to answer it step by step, and finally check your work to make sure that you didn’t skip a step or misremember some fact. Something that any parent who has ever helped their kid with homework could have told them.

Infrastructure and Energy

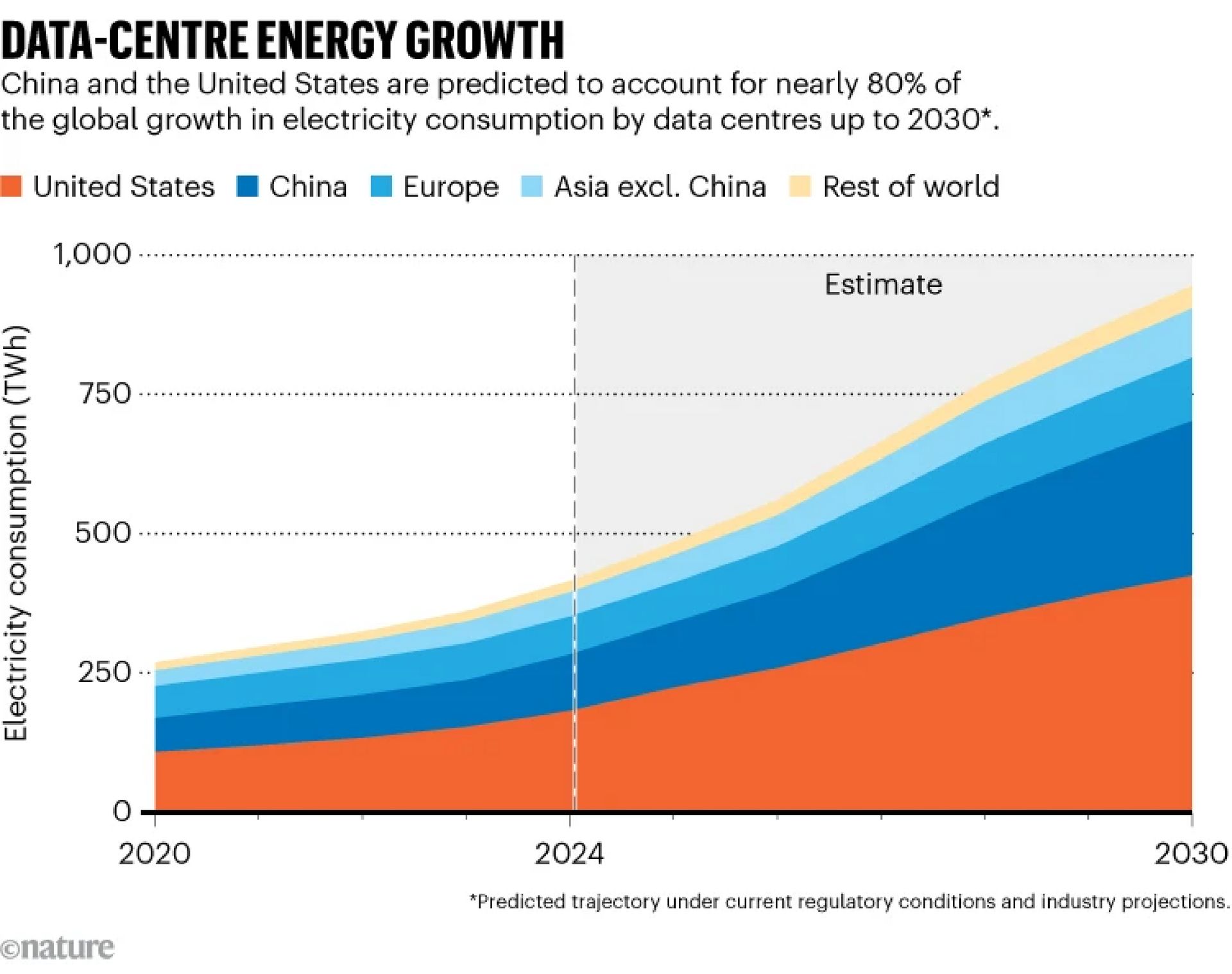

Big Tech firms are betting heavily that the AI boom is real, and they have plowed literally hundreds of billions of dollars into building data centers just this year. The investment in building data centers is so large that it is propping up the entire US economy. Some analyses suggest that US GDP would be stagnant or shrinking into recession if not for the massive spending on AI data centers.

Data centers need more than buildings and chips to operate. They need lots of electricity and water. Both resources are limited in the short term, and one of the stories of 2025 that will continue to be a story in 2026 is how data centers are driving up electricity rates and tightening water supplies.

China’s rise to AI global powerhouse

Both Biden and Trump thought that they could hobble China’s AI progress by banning the export of our most capable computer chips. China said: “Think again!” China has shrugged off the chip ban and leapt into contention as the second most advanced AI power in the world through a variety of strategies. First, of course, they evaded the ban the old-fashioned way: they smuggled in banned chips, or rented use of them from data centers in other countries.

More significantly, they set a few of their millions of world-class engineers to work on the problem, and began developing their own superchips. They tasked a few more of their millions of world-class engineers with developing more efficient architectures for AI models, and shocked the world with DeepSeek, a much smaller model than those from OpenAI, Google, and Anthropic, but with near-equal performance.

And for the final twist, China’s AI model makers are open-sourcing their smaller, more efficient models, so that anybody can run them on their own hardware for free. This last chess move gains lots of goodwill from around the world, while savagely undercutting the prices that the Western, investment-backed model makers can charge, weakening their grip on the market.

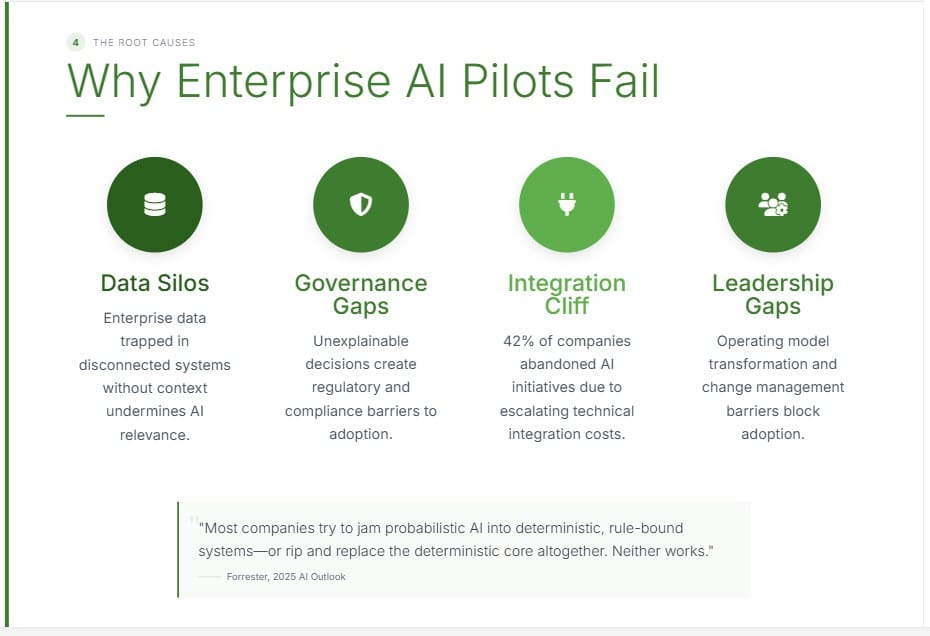

Pilot Purgatory in the Enterprise

2025 was the year that most large businesses started implementing AI projects. Most failed. One widely cited paper from MIT estimated that 95% of enterprise AI projects failed to demonstrate a positive return on investment within six months. The reasons for the failures included unclear goals, unclear leadership, messy data, and a fundamental misunderstanding of how to make AI a reliable cog in the corporate machine. We are now seeing a new wave of implementation of AI in large companies, using new approaches. These include more bottom-up experimentation at the departmental or even individual level, making AI more an assistant than a replacement (human-in-the-loop), and using AI only for the tasks it is good at (creating text, summarizing) while using computer code to perform the steps that need absolute reliability.

Vibe Coding

AI models are very good at translating text from one language to another, and this includes translating English requests into computer code. Both OpenAI and Anthropic have prioritized computer coding ability in their models, and consequently there is now a burgeoning industry of AI coding assistant apps. The result is called “vibe coding” - giving the AI coding assistant an English-language prompt, so that the AI “gets the vibe,” and then proceeds to produce the computer code semi-autonomously. AI coding app Cursor has grown to over 1 million users and $1 billion in annual revenue in just 2 years; it recently raised $2.3 billion in venture backing, at a valuation of $29.3 billion for the company.

Computer software is rapidly being transformed from a hand-crafted product to a mostly machine-produced artifact, with (for now) lots of human input in the planning, verifying, and quality control aspects. The job of senior software developers is transitioning to supervising AI coding agents. This means each senior software developer is far more productive. It also means that the demand for entry-level software engineers has dropped off a cliff. (See next story.)

The Death of Entry Level Jobs

The much-feared AI job apocalypse, in which AI takes away almost everyone’s job, has not yet happened. But what has occurred is a notable slowdown of hiring for entry-level jobs in multiple industries, in particular software engineering. Where once college graduates with computer science degrees were routinely offered six-figure starting salaries, now even graduates of elite schools such as Stanford are having trouble finding a job in their field.

The reason is vibe coding (see previous story). One senior software developer can now be so much more productive using AI coding agents that the need for entry-level junior developers has all but dried up. One can imagine that the same process could occur in other industries. In law firms, for example, partners could get rid of all junior associates and have the legal research and preliminary drafting done by AI agents and paralegals - or maybe by AI agents alone. The one-person law firm might make a resurgence.

How a profession survives beyond a single generation if there are no new entrants is an issue that no one as yet has an answer for.

Deepfakes

AI’s ability to generate realistic images, videos, and voices, known as deepfakes, has reached a level where it is increasingly difficult to tell what is real. This is empowering bad actors, who are using deepfakes for fraud and for political disinformation. Individuals are being defrauded by scammers who make phone calls using voices of family members that have been cloned from social media posts, making urgent pleas for bail money after an imaginary auto accident. Russian bot farms post compromising or inflammatory images online to discredit political foes and deepen internal division in the US population.

To date, efforts to counter these bad actors have been scattered and grossly insufficient. A foolproof way to mark an image as having been created by AI would help enormously, but no method proposed so far for “watermarking” AI images has survived the ability of AI to quickly and cheaply remove those watermarks.

Predictions for 2026

From Reasoning to Agents

If 2025 was the Year of Reasoning, 2026 is shaping up to be the Year of Agents. Until now, AI models have mostly just talked or produced images. A number of trends are converging to make AI models capable of performing actions semi-autonomously - in other words, to act as agents to perform tasks for us. Such tasks could include shopping, buying, negotiating terms, and more. Experts are predicting a fast-burgeoning role for these agents. On the low end, elite consulting firm McKinsey foresees that AI agents will be performing $3 trillion to $5 trillion in online transactions by 2030. The much more optimistic Gartner Group projects $15 trillion of agent-led transactions by 2028.

AI Sovereignty

Currently, the most advanced AI models in the world are owned by just 3 large American companies (OpenAI, Google, and Anthropic), and the only way to get access to these models is to rent them on the cloud. Lots of people are unhappy about this. For one, many nations are wary of having their AI served up to them from a foreign country, which may have different values, a different language, and certainly has some different interests. As of now, the European Union, India, and the United Arab Emirates have made significant moves to encourage home-grown AI models and local infrastructure. And of course China is charting its own path in AI, and is widely acknowledged as the second global AI superpower.

Local Intelligence

In a parallel development, companies and individuals are also making moves to break their dependency on AI model makers and their associated cloud infrastructure. A number of companies are experimenting with running smaller, but still highly capable models on-premises on their own hardware. This gives the company more control and fewer security issues, and it can be cheaper than using large models from a major AI model maker (although savage price competition among the Big 3 has kept costs surprisingly low).

Individuals are also experimenting with using AI models on their devices (laptop, phone, and/or watch). The major reason is data privacy. There is a concern that as AI gets to know more and more about you and your preferences, the possibility of manipulation of you by the AI vendor goes up sharply - potentially changing your purchases, your news consumption, and even your vote. This becomes even more possible in a world where you are no longer interacting online directly, but are having agents do it for you. Any company that could actually guarantee individuals a secure agent that would act only in their interests would have no trouble attracting customers.

Building the Future We Want

The power of AI can be used in myriad ways - to further concentrate wealth and power, and build a surveillance state where no one is safe; or to make a fairer, more equitable world that promotes freedom, dignity, and human flourishing. AI will likely bring unimaginable abundance. The question is: how widely will it be shared?

The future is being shaped as you read this. If you care about any of the issues touched on in this edition of the newsletter, it may be time to think about lending your voice and your vote to the future you hope for.

That's a wrap! More news next week.