AI Weekly Wrap-Up

New Post 2-12-2025

Top Story

Paris AI Summit blows up, US and UK both refuse to sign joint declaration

This week’s AI Summit in Paris broke up in acrimony. Vice President J.D. Vance came in as a spoiler, calling the EU’s red tape a barrier to innovation and vowing that the US will dominate AI. In the end, neither the US nor the UK signed the summit’s Statement on Inclusive and Sustainable AI, which was nevertheless approved by all 60 of the other nations in attendance. The EU followed up this rejection of US leadership by proposing a plan to direct 200 billion euros toward AI and AI infrastructure, and French President Emmanuel Macron has proposed a 109 billion euro plan for investment in AI in France alone. The AI gold rush is on, and competition, even among erstwhile allies, is heating up.

VP J.D. Vance made no friends at the Paris AI Summit yesterday.

Clash of the Titans

Elon floats an offer to buy OpenAI for $97 billion, Sam says no dice

Chaos agent Elon Musk has sprung another ploy to try to block OpenAI from spinning off a for-profit arm: he has floated a hostile bid to buy the AI assets of the parent not-for-profit OpenAI Foundation for $97 billion. This is clever, and nasty, and so it’s vintage Musk. The not-for-profit will have to consider any bona fide buyout offer in order to get maximum funds for its charitable mission, and due diligence by Musk could tie OpenAI up in knots for months, all while Musk purloins OpenAI’s secret sauce. OpenAI CEO Sam Altman’s reply was serene, and subtly nasty in return: he said he wished Elon would stop the shenanigans and just compete by building a better product, and that Elon was clearly not a happy person (“I feel for the guy”). Expect more fireworks. Musk is feeling invincible as the nation’s Deputy President, and Sam is playing for all the AI marbles.

Elon at his other part-time job as Deputy President.

AI is getting smarter faster, insiders expect human-level AI by 2027

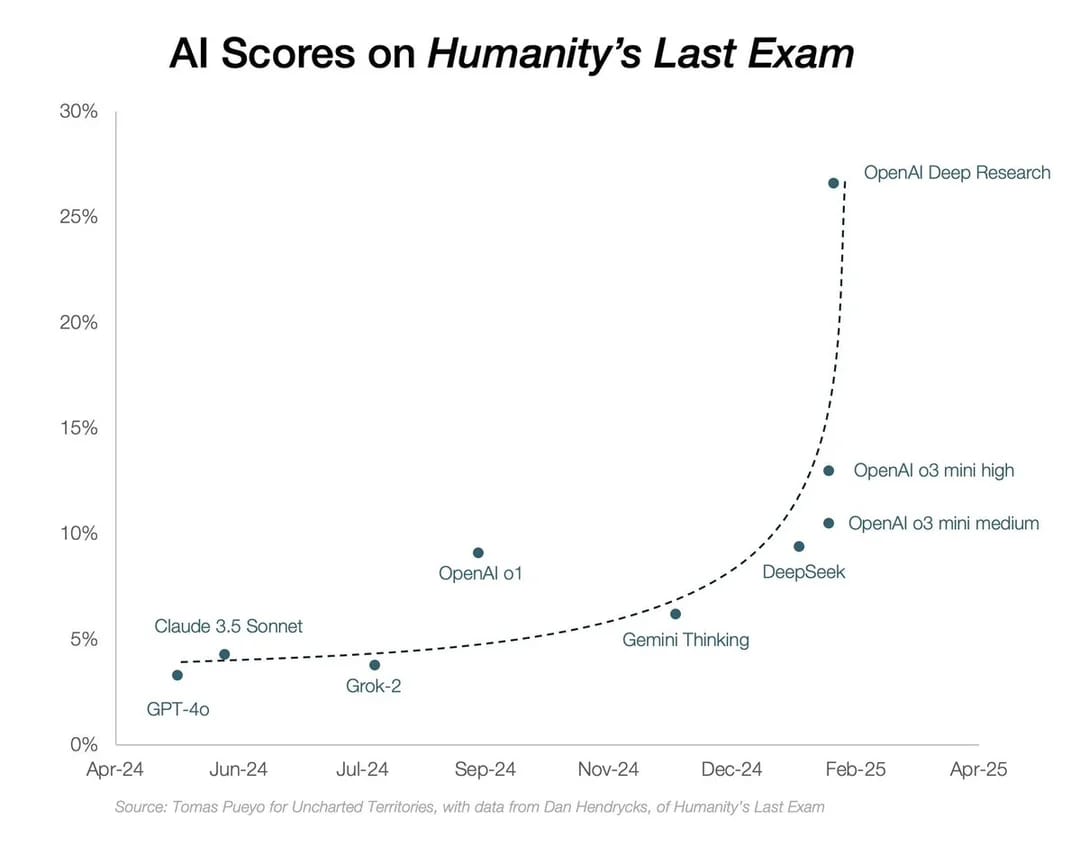

AI seems to be hitting another inflection point, with its capabilities improving faster and faster. Just 2 months ago OpenAI released its paradigm-shifting “reasoning” model, o1, which can solve more complicated logical puzzles and make plans step by step. Competitors such as Google and copycats like DeepSeek soon replicated that feat. Then 2 weeks ago OpenAI released an even better reasoning model, o3-mini, and 10 days ago it released Deep Research, a semi-autonomous AI research assistant, at a pricey $200 per month. Early reviews of Deep Research are wildly positive: Wharton professor Ethan Mollick reports that, for the first time, he is getting unsolicited comments from senior managers at large companies that an AI product will change how they do their jobs. Deep Research has scored an unprecedented 26.6% on Humanity’s Last Exam, a benchmark compiling 3,000 of the hardest questions drawn from a wide range of fields. Meanwhile, OpenAI’s models have been rising in the rankings of competitive programming: CEO Sam Altman has said that the company has an internal AI model that ranks as the 50th best programmer in the world. Both Altman and Anthropic CEO Dario Amodei seem to exude a serene swagger these days. They have both predicted human-level AI performance within the next 2-3 years, and they act as though they have been to the mountaintop and seen the future.

Fun News

Stanford/UW researchers match OpenAI model for $50 in 26 minutes

A team from Stanford and the University of Washington was able to train an advanced “reasoning” AI model equivalent in performance to OpenAI’s recently released o1 model, using less than half an hour of computing time at a cost of less than $50. The team started with a small open-source AI model known as Qwen, developed by Chinese e-commerce giant Alibaba. They then posed 1,000 carefully curated math and reasoning questions to a large leading-edge reasoning AI model, Google’s Gemini 2.0 Flash Thinking Experimental. The small Qwen model was then trained on the questions, answers, and chains of reasoning produced by the large Google model. This process, known as “distillation,” lets a small model be trained to perform like a much larger and more powerful one. The training set was small enough that training took only 26 minutes, at a cost of less than $50 in computer time. China’s viral sensation, the DeepSeek AI model, was trained using a similar process. This is the dilemma of the large AI vendors: what they spend billions to create can be replicated for the cost of lunch at the local deli.
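For readers curious about the mechanics, here is a minimal Python sketch of the data-preparation half of distillation: each question the big “teacher” model answered, along with its reasoning and final answer, is packaged into a prompt/target pair that the small “student” model is then fine-tuned on. The record format, the field names, and the “Answer:” separator are illustrative assumptions, not the Stanford/UW team’s actual pipeline, and the fine-tuning step itself is left out.

```python
from dataclasses import dataclass


@dataclass
class TeacherTrace:
    """One question answered by the large teacher model (hypothetical record format)."""
    question: str
    reasoning: str  # the teacher's chain of reasoning
    answer: str     # the teacher's final answer


def to_training_example(trace: TeacherTrace) -> dict:
    """Turn a teacher trace into a prompt/target pair for fine-tuning the student.

    The student learns to reproduce both the reasoning and the answer.
    The "Answer:" separator is an assumed convention, chosen for illustration.
    """
    target = f"{trace.reasoning}\nAnswer: {trace.answer}"
    return {"prompt": trace.question, "target": target}


def build_distillation_set(traces: list, limit: int = 1000) -> list:
    """Keep up to `limit` traces that have both reasoning and an answer."""
    usable = [t for t in traces if t.reasoning.strip() and t.answer.strip()]
    return [to_training_example(t) for t in usable[:limit]]


if __name__ == "__main__":
    traces = [
        TeacherTrace("What is 17 * 3?", "17 * 3 = 51.", "51"),
        TeacherTrace("Is 91 prime?", "", "No"),  # dropped: no reasoning trace
    ]
    for example in build_distillation_set(traces):
        print(example)
```

The student never sees the teacher’s internals, only its outputs, which is why this kind of copying is so cheap: a thousand well-chosen examples is a tiny dataset by modern standards.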

Study shows that only 0.1% of people can reliably detect AI deepfakes

Biometric identity verification vendor iProov released a study of 2,000 consumers in the US and UK who were asked to distinguish real images and videos from AI-generated deepfakes. The company called the results “alarming”: only 0.1% of participants correctly classified every image and video as real or fake, even though they had been primed to look for deepfakes. iProov suspects that in real-world settings, consumers would be even worse at spotting the fakes. The study also found that older participants were more likely to fall for deepfakes, and that participants across the board found deepfake video harder to detect than still images.

Deepfake technology can swap Tom Cruise’s face onto your tennis instructor.

Anthropic analyzes AI penetration in various occupations

AI startup Anthropic analyzed 4 million user interactions with its chatbot Claude to understand which occupations are using AI, and for what. It found that many occupations show some use of AI (36% of occupations used AI for at least 25% of their tasks), but few use it extensively (only 4% of occupations used AI for at least 75% of their tasks). Leading the pack in AI usage were computer programmers, people who write or design for a living, and scientists.

BBC finds chatbots often fail to accurately summarize news

The BBC, the venerable UK public service broadcaster known worldwide for the quality of its news coverage, put 4 AI chatbots to the test. The BBC had OpenAI’s ChatGPT, Microsoft’s Copilot, Google’s Gemini, and AI search startup Perplexity each summarize the same 100 news articles from the BBC’s December coverage. BBC journalists with expertise in each topic rated the summaries on criteria such as accuracy and impartiality. They judged 51% of the responses to have at least one significant issue; a subset of those, amounting to 19% of the responses, contained factual errors. The BBC finds these statistics troubling. Of course, another way to look at these numbers is that a technology invented barely 2 years ago is already 81% accurate at a fairly high-level human skill, and that even the BBC, which as legacy media is highly motivated to find flaws in potential news competitors, could find no flaw in roughly half of the chatbots’ summaries. Glass half empty, or half full?

The BBC put 4 AI chatbots to the test as news summarizers, and found them flawed.

Robots

MIT builds swarms of tiny insect drones that fly 100x longer

MIT scientists are working on designing robotic insects that can act as mechanical pollinators of crop plants, in order to increase crop yields. Previous prototypes were too large and unwieldy to be practical, but MIT’s latest design has miniaturized the drone while increasing its flight time 100-fold. As yet there has been no word on how the bees are taking all this.

MIT’s insect drone is designed to pollinate crops, and now flies 100 times longer than previous designs.

Robotics startup Figure ditches OpenAI for its own AI model

High-flying humanoid robot startup Figure decided last week to end its collaboration with OpenAI in order to focus on its own in-house model, which it touts as a “major breakthrough.” The rupture was all the more surprising because OpenAI has been a longtime investor in Figure. However, OpenAI has recently been building its own in-house robotics group, and Figure’s hard-charging CEO Brett Adcock may have decided that continuing to rely on OpenAI was too much like letting the fox into the henhouse. Say what you will about OpenAI CEO Sam Altman (and I do), no small part of his success stems from his propensity to ignore boundaries when that is to his advantage, so Adcock has ample reason to worry.

Robotics startup Figure’s current Figure 02 humanoid robots are making BMWs in Spartanburg, SC.

AI in Medicine

JAMA: Study shows people prefer AI to physicians for online questions

Stanford researchers surveyed patients about their satisfaction with answers to questions they had posed through patient portals, comparing answers generated by AI with those written by physicians. Independent clinicians rated the answers for clinical accuracy. The study found that the AI answers were as accurate as the physicians’ answers, and patients rated the AI answers as more complete, more understandable, and more empathic.

Study co-author Eleni Linos, MD, DrPH, Director of Stanford’s Center for Digital Health.

AI tool finds lifesaving medication for rare disease

Researchers at UPenn have used an AI model to save the life of a patient with a rare condition known as idiopathic multicentric Castleman’s disease (iMCD). iMCD is a life-threatening lymphoproliferative disorder characterized by severe systemic inflammation and swollen lymph nodes, which can lead to multiorgan failure and death. The researchers developed an AI model to assess existing FDA-approved medications for their likelihood of helping in this disease, and found that adalimumab, a monoclonal antibody approved to treat a variety of inflammatory conditions from arthritis to Crohn’s disease, was the top-ranked candidate. Adalimumab blocks an inflammatory protein known as tumor necrosis factor, or TNF. TNF levels are extremely high in iMCD, and this may be a major driver of the disease’s destructive effects on the body. A patient with iMCD who was entering hospice care, with little hope for survival, is now two years into a complete remission after being treated with the AI-selected medication. Using AI to find existing FDA-approved medications that can be repurposed to treat other serious diseases is a major opportunity to improve care without the years of delay involved in the clinical trials needed to prove the safety and efficacy of a new drug.

Adalimumab is a monoclonal antibody that blocks certain inflammatory proteins in the body.

That's a wrap! More news next week.