AI Weekly Wrap-Up

New Post 6-25-2025

Top Story

Anthropic gets split decision in copyright suit

AI startup Anthropic, creator of the highly regarded Claude chatbot, had a big day in court yesterday. A federal judge in San Francisco handed down a split ruling in a copyright infringement lawsuit against the company. In the first part of his ruling, the judge gave Anthropic a landmark victory. He decided that using copyrighted books to train its AI models was “fair use” (and thus allowed), because the resulting models did not just regurgitate the ingested works but transformed them when answering queries. This kind of ruling has been the goal of AI companies ever since the first copyright infringement lawsuits were filed in early 2023. AI models get much of their power from being trained on vast amounts of data, most of it scraped from the internet, including copyrighted works. Nobody cared until the chatbots started making big bucks, none of which flowed to the copyright owners. Then the lawsuits started, the most famous being the pugnacious, take-no-prisoners suit filed by the New York Times against OpenAI, creator of ChatGPT. (The lawsuit is famous mostly because the NYT has plastered its own view of the case all over its pages.) OpenAI and other AI model makers have argued that AI training is fair use, and now the judge in the Anthropic case has agreed. But wait, the judge had more to say. In the second part of his ruling, he allowed the case to proceed to trial over Anthropic's use of pirated copies of 7 million copyrighted books, which the company knowingly downloaded to build its training library. Statutory damages for willful copyright infringement can reach $150,000 per work, which in this case could add up to more than $1 trillion. Even for a well-funded AI company, a trillion dollars is an unpayable sum that would mean bankruptcy. So the battle over copyright continues, with no clear winner yet, but perhaps with some indication of how the ground rules for AI training will be set in the future.

Federal judge hands Anthropic a win on fair use, a loss on piracy in copyright case.
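The damages math behind that trillion-dollar headline is simple multiplication, and it helps to see the range. A court picks a per-work amount within a statutory range, so $1 trillion is a theoretical ceiling, not a likely award. This minimal Python sketch assumes every one of the roughly 7 million works would qualify for statutory damages (an assumption; eligibility and the per-work amount are for the court to decide). It brackets the exposure between the ordinary statutory minimum of $750 and the willful-infringement maximum of $150,000 per work.

```python
# Per-work statutory damages range under US copyright law (17 U.S.C. § 504(c)).
MIN_PER_WORK = 750        # ordinary statutory minimum, USD
MAX_PER_WORK = 150_000    # ceiling when infringement is found to be willful, USD

# Approximate count of pirated works at issue, as reported ("7 million").
# Assumption: every one of them qualifies for statutory damages.
PIRATED_WORKS = 7_000_000


def statutory_exposure(works: int, per_work: int) -> int:
    """Total damages if every work received the same per-work award."""
    return works * per_work


low = statutory_exposure(PIRATED_WORKS, MIN_PER_WORK)
high = statutory_exposure(PIRATED_WORKS, MAX_PER_WORK)
print(f"Floor:   ${low:,}")   # Floor:   $5,250,000,000
print(f"Ceiling: ${high:,}")  # Ceiling: $1,050,000,000,000
```

Even at the floor, the total would run to billions of dollars.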

Clash of the Titans

SoftBank pitches $1 trillion AI/Robotics hub in Arizona

Masayoshi Son has never been known for his humility. Last week, news broke that Son, the CEO of SoftBank, the world’s largest technology venture capital fund, is pitching a $1 trillion hub for robotics and AI in Arizona. Son is apparently seeking to team up with Taiwan Semiconductor Manufacturing Company (TSMC), the world’s leading AI chip fabrication company. TSMC has already poured billions of dollars into building a state-of-the-art chip fabrication plant in Arizona, with plans to eventually invest as much as $165 billion. Son seemingly wants to leverage TSMC’s commitment into a larger project: an AI and robotics manufacturing hub modeled on China’s technology, finance, and manufacturing center in the city of Shenzhen. To pull this off, Son needs investment partners as well as federal and state support to ease permitting and offer juicy tax breaks.

TSMC’s chipmaking plant in Arizona will grow substantially, with or without SoftBank CEO Son’s help.

Microsoft to put on-device AI into Settings on Copilot+ PCs

Leaving headline-grabbing Large Language Models like ChatGPT to its partner OpenAI, Microsoft has focused on building smaller but still capable models that can run on a user’s own device. We are now seeing one of the first fruits of that approach: Microsoft has announced a new Small Language Model, Mu, with only 330 million parameters. GPT-4 is reported to have 1.8 trillion (with a “T”), a figure OpenAI has never confirmed, which would make it roughly 5,500 times larger. The small but mighty Mu model runs entirely on the NPU (Neural Processing Unit, a processor dedicated to AI workloads) of Microsoft’s next-gen Copilot+ PCs, freeing up the CPU. The on-board Mu model will power the Settings agent on Copilot+ PCs, letting users adjust their machine’s settings with natural language requests instead of clicking through confusing menus of endless cascading checkboxes. Regardless of the success (or not) of the Mu model or the Copilot+ architecture, this is clearly the future of human-machine interfaces: helpful AI agents that hide the complexity of hardware and software behind a chat interface that speaks your language.

Microsoft announces a small but mighty AI inside your next PC.
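To put the size gap between Mu and GPT-4 in perspective, here is a back-of-the-envelope Python sketch. The GPT-4 parameter count is an unconfirmed estimate. The 2-bytes-per-parameter figure is an assumption (16-bit weights), used only to illustrate why a model of Mu's size is practical to run on a personal computer while one of GPT-4's reported size is not.

```python
# Parameter counts: Mu's is Microsoft's stated figure; GPT-4's is a widely
# reported estimate that OpenAI has never confirmed.
MU_PARAMS = 330_000_000
GPT4_PARAMS_ESTIMATE = 1_800_000_000_000

# Assumption for illustration: 2 bytes per weight (16-bit precision).
# Real on-device deployments often quantize to fewer bits.
BYTES_PER_PARAM = 2

ratio = GPT4_PARAMS_ESTIMATE / MU_PARAMS
mu_gb = MU_PARAMS * BYTES_PER_PARAM / 1e9
gpt4_gb = GPT4_PARAMS_ESTIMATE * BYTES_PER_PARAM / 1e9

print(f"GPT-4 (est.) is about {ratio:,.0f}x larger than Mu")
print(f"Mu weights: ~{mu_gb:.2f} GB; GPT-4 (est.) weights: ~{gpt4_gb:,.0f} GB")
```

Quantizing to 8 or 4 bits would shrink both memory footprints proportionally, but the ratio between the two models stays the same.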

NATO’s new 5% of GDP target will boost defense-tech AI in Europe

Pushed hard by Donald Trump to boost their defense spending, NATO allies agreed today to a historic target of 5% of GDP by 2035 (current spending is around 2% of GDP). Analysts predict that much of the massive new spending will pour into AI and robotics companies. Although US defense-tech companies like Palantir and Anduril will likely benefit, many observers expect European countries to try to jump-start their own tech sectors by nurturing local firms, in pursuit of what is being called “technological sovereignty.” EU nations have grown weary of the cultural dominance of the US, spread by our technological dominance in social media, search, and more. European firms likely to benefit from the coming gusher of defense spending include Germany’s Helsing (maker of autonomous attack drones) and France’s Preligens (purveyor of AI solutions for defense and intelligence applications).

Preligens’ founders Arnaud Guerin and Renaud Allioux are nerds, but cooler, cuz they’re French.

Fun News

Karpathy says AI is the third major revolution in software

AI folk hero Andrej Karpathy, a founding member of OpenAI and the former head of AI at Tesla, recently gave a talk to young startup founders at Y Combinator. His talk was a magisterial overview of the past, present, and future of software. His major points included:

- We are still in the early days of the AI revolution.
- The current chat interface is clunky and won’t last.
- The next phase of AI is agents: intelligent assistants that semi-autonomously perform tasks you delegate to them.
- Most people will no longer need to learn to code, because AI responds to natural languages such as English.
- In the future, most websites will be designed primarily for use by AI agents, not by humans.

Solo entrepreneur’s startup bought out for $80 million in just 6 months

Last week, no-code website builder Wix announced that it had bought Base44, a 6-month-old AI software coding app, for $80 million in cash. The founder and sole owner of Base44 is Israeli software developer Maor Shlomo. He began developing his “vibe coding” app alone as a fun side project, documenting his progress on LinkedIn and X/Twitter. His online following grew rapidly, and once he had a working product, so did his customer base, which reached 250,000 active users by last month. Although Shlomo had to hire 8 employees and cover high cloud computing costs to manage this growth, the company was highly profitable, generating $189,000 in profit in May.

Although Shlomo accomplished an incredible feat with his lightning-fast development and sale of Base44, he did start out with some advantages. He was already well known in Israeli tech circles from a previous startup that succeeded in a more conventional way, giving him access to potential employees and seed capital. His genius move of developing in public struck a chord with the tech community, so he never had to worry about marketing: his burgeoning community of followers gave him remarkable word-of-mouth visibility. Still, his story makes the hype that AI can help solo entrepreneurs build major companies seem a little more real.

Solo entrepreneur Maor Shlomo has 80 million reasons to smile.

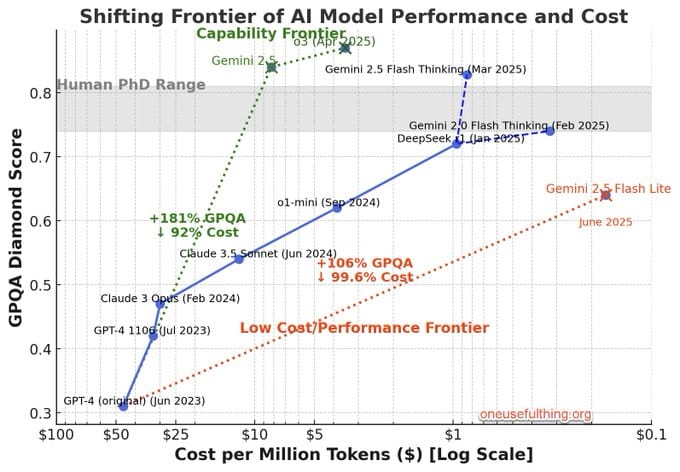

Top AI models are now smarter than PhDs

The Graduate-Level Google-Proof Q&A (GPQA) benchmark for AI models is just what its name suggests: a battery of highly challenging questions requiring reasoning and problem solving, mainly in STEM fields. The problems are supposedly “Google-proof” because the answers cannot readily be found with a web search. Human experts with graduate degrees tend to answer only 75% to 82% of the questions correctly. Two years ago, the most capable AI models of the time, OpenAI’s GPT-4 and Google’s Gemini, scored only around 32%. However, in March and April of this year, Google’s Gemini 2.5 Pro and OpenAI’s o3 both scored above the human-expert range, with Gemini 2.5 Pro scoring 84% and o3 scoring 87.7%. Meanwhile, the cost of using AI dropped 10-fold over those 2 years. Experts predict that AI models will keep getting smarter and cheaper for the foreseeable future. “Intelligence too cheap to meter” may become more than hype some day.

AI performance on the GPQA benchmark of complex questions surpassed humans this spring.

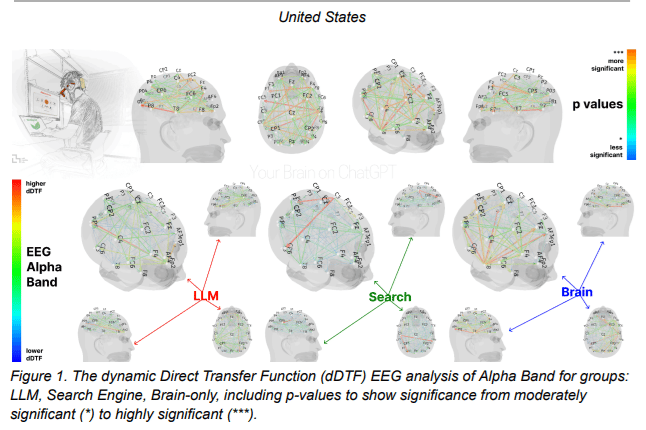

Does ChatGPT rot your brain?

MIT researchers have released a study comparing the cognitive effort of students who wrote essays under 3 different experimental conditions. One group had no access to online tools, a second group could use a search engine, and a third group was given access to ChatGPT. The participants’ essays were scored for originality and depth, and their mental effort was measured with EEG. The researchers found that the Brain-only group wrote the most original essays and showed the most mental effort. The Search Engine group was intermediate on both originality and effort, and the ChatGPT group was lowest on both measures. The researchers interpreted these findings as showing that AI use causes students to accumulate “cognitive debt” and to learn little from the essay-writing task; in fact, some of these students couldn’t remember what “they” wrote just minutes later. However, critics of the study have pointed out that a closer look at the data suggests that students who actually engaged with the writing task did well on both measures, regardless of the tool they used. It’s just that most students used the online tools to do most of the cognitive work for them, and so learned little. Those without access to tools did not have that option, and were forced to learn. This squares with other studies of AI tutors, in which students who engaged actively with an AI to learn a topic often learned far more, and far faster, than students in conventional classrooms. AI will almost inevitably be used by students. The important question (IMO) is not whether, but how, they will use it.

MIT researchers used EEGs to look at brain activation patterns in students writing essays.

Robots

China unveils mosquito-sized military drones

China’s National University of Defense Technology has announced the development of a mosquito-sized drone for surveillance and reconnaissance in “sensitive” environments. The tiny spy-bots reportedly have flapping wings and miniature legs that let them navigate to a target through complex environments. The US is developing similar microbots for a wide range of civilian and military applications.

Unsurprisingly, the Chinese military refused to share pictures of their latest micro-drones. Here's one artist’s conception.

UK researchers develop “pipebots” to find and repair water leaks

University of Sheffield researchers are developing miniature “pipebots” to inspect and even repair leaky underground water pipes. The UK’s aging water system dates back to the Victorian era and now includes some 215,000 miles of underground pipes. Finding and repairing leaks is difficult and expensive, costing over £4 billion (some $5.4 billion) per year, and generally involves digging up roads somewhat blindly to find the leaking section. The pipebots are about the size of a toy car and can navigate the underground pipes semi-autonomously to locate the source of a leak. Once the leak is pinpointed, it can be repaired with targeted digging that minimizes disruption to traffic and water service.

UK researchers are developing semi-autonomous pipebots to detect water leaks underground.

AI in Medicine

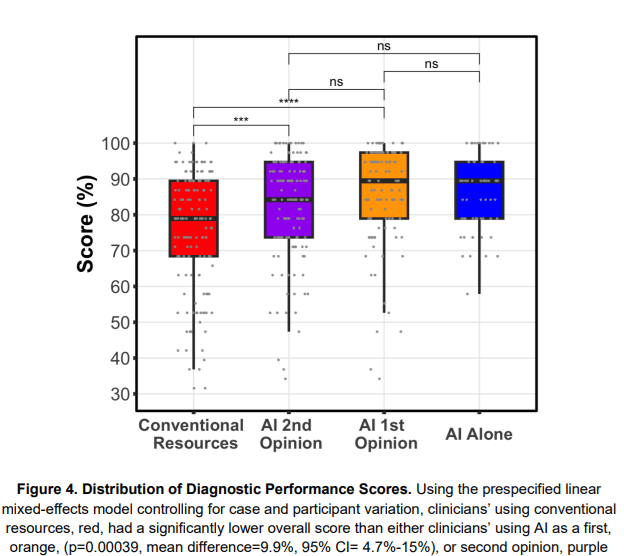

Stanford study shows AI improves physicians’ diagnostic accuracy

A study at Stanford University compared the diagnostic accuracy of 70 physicians on challenging clinical reasoning vignettes, with and without access to a custom AI chatbot. Physician accuracy improved from about 75% with conventional tools (such as UpToDate) to about 85% with the AI, a difference with a p-value of 0.00001. That means that if the chatbot actually made no difference, a gap at least this large would arise by chance only about once in 100,000 repetitions of the study. That is very strong evidence of a real improvement, though not quite the same as a 1-in-100,000 chance that the chatbot didn't help. (As an aside, the AI alone scored 90% accuracy, suggesting that perhaps the physicians' main role was to confuse the chatbot, but the difference between the AI alone and the AI-assisted physicians was not statistically significant.) The authors argue that AI should be welcomed into clinical practice as a teammate, not just a tool.
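Since p-values are so easy to misread, here is a minimal Python sketch of one way to compute one: an exact permutation test for a difference in means. The newsletter doesn't say which test the Stanford authors used, so treat this as an illustration of the idea rather than their analysis. The scores below are hypothetical, invented purely for the example.

```python
from fractions import Fraction
from itertools import combinations


def mean_diff(treated, control):
    """Exact difference in group means (treated minus control)."""
    return Fraction(sum(treated), len(treated)) - Fraction(sum(control), len(control))


def exact_permutation_p_value(control, treated):
    """Two-sided exact permutation test for a difference in means.

    Under the null hypothesis (the tool makes no difference), the group labels
    are interchangeable. Enumerate every way to relabel the pooled scores and
    return the fraction of relabelings whose difference is at least as extreme
    as the observed one.
    """
    observed = abs(mean_diff(treated, control))
    pooled = list(control) + list(treated)
    hits = total = 0
    for idx in combinations(range(len(pooled)), len(treated)):
        chosen = set(idx)
        t = [pooled[i] for i in idx]
        c = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        total += 1
        if abs(mean_diff(t, c)) >= observed:
            hits += 1
    return Fraction(hits, total)


# HYPOTHETICAL scores (cases correct out of 20) for 5 physicians per arm,
# invented for illustration only; these are NOT the Stanford study's data.
without_ai = [11, 12, 13, 14, 15]
with_ai = [16, 17, 18, 19, 20]

p = exact_permutation_p_value(without_ai, with_ai)
print(p)  # 1/126
```

With only five scores per arm, even perfect separation can't push the p-value below 1/126 (about 0.008). A value like 0.00001 takes much more data. For realistic sample sizes, full enumeration becomes infeasible, and analysts typically sample random relabelings instead.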

Sword Health offers AI-enabled physical therapy, mental health

Sword Health is a fast-growing digital health company offering AI-enabled physical therapy, with online sessions tracked by motion sensors. The company recently raised $40 million at a $4 billion valuation. At the same time, Sword announced that it is rolling out a new AI-enabled mental health offering that includes a personalized AI Care Agent, a wearable that tracks physiological signals that may indicate depression or anxiety, and remote access to human mental health professionals. It remains to be seen whether Sword can replicate its success in digital physical therapy in the fraught arena of mental health care.

Sword Health offers online physical therapy powered by AI and motion sensors.

That's a wrap! More news next week.