- AI Weekly Wrap-Up

New Post 7-17-2024

Top Story

Claude-mania builds over new Artifacts

Claude, the chatbot from AI startup Anthropic, has long played second fiddle to category-leader ChatGPT from OpenAI. But now, with its latest update known as Claude 3.5 Sonnet, Claude is having “A Moment.” The key new feature in the new Claude is called Artifacts, which is an interactive window that allows the user to create computer applications on the fly with natural language prompts, and see a working application running in real time. Although the technology is not groundbreaking, the user interface is. Suddenly, ordinary, nontechnical users are able to create working applications and see them run instantly. The instant feedback allows rapid improvements, and many users report having created games or websites in minutes, with no prior coding experience. I created a tic-tac-toe game and an educational flash card game, both in less than 5 minutes. Only “Pro” (paying) users of Claude can create Artifacts, but they can be “published” so that all Claude users, paid or free, can use them, and remix or modify them for a personalized version. You can get a free account with Claude (at claude.ai) and join the fun. A link to some demos of Artifacts created by users is below, followed by a link to more in-depth instructions on how to create and use them.

Clash of the Titans

OpenAI remains AI’s drama queen: The good, the bad, and the just plain weird

OpenAI continues to be AI’s attention hog, making headlines for all the right and wrong reasons. This week, the company was the subject of not one, but three major stories - one good, one bad, and one just weird.

The Good: Reuters reported on Monday that OpenAI is working on a new “reasoning engine” codenamed Strawberry. Strawberry-infused AI will be able to solve complicated math problems (it has apparently already scored 90% on a benchmark of championship math problems), make complex plans, and execute them. All of these abilities are far beyond what current models can do. Strawberry is reported to be the current iteration of Q*, the breakthrough reasoning project that spooked the OpenAI Board into firing CEO Sam Altman (before he organized an employee walkout and fired the Board. Like I say - drama.)

The Bad: A group of anonymous whistleblowers, presumed to be OpenAI employees, sent an open letter to the SEC alleging that OpenAI’s employment contracts illegally coerced employees to refrain from raising concerns about the company’s safety practices with federal regulators. Copies of this letter were also sent to members of Congress. It’s hard to believe that OpenAI’s lawyers would allow them to create employment contracts that were blatantly illegal, but it’s not hard to believe that OpenAI may have pushed the envelope of legality a bit. The real issue here is that OpenAI continues to have problems pivoting from an idealistic nonprofit AI research organization to a multibillion-dollar for-profit company. A number of employees who joined the former are none too happy finding themselves now in the latter, and they are pushing back.

The Weird: Forbes reports that OpenAI has developed a 5-stage roadmap of the path ahead to create AGI (Artificial General Intelligence, i.e. AI as intelligent as human beings.) The levels are 1) conversational AI, like ChatGPT, which is available currently, 2) reasoning AI, like the “Strawberry” model described above, 3) autonomous AI, which can act as “agents,” developing and executing complex plans, 4) innovative AI, which can create novel concepts independently, and 5) organizational AI, which can perform all the functions of an entire organization of humans. AGI is the Holy Grail of the original founders of OpenAI, and exerts a weird, quasi-religious hold over many of its top employees. AI will no doubt continue to increase in capability in the future, but the chances that this development will neatly follow OpenAI’s earnest little 5-step plan seem low, IMHO.

“AI superintelligence is gonna be lit, dude.”

Google’s robot brain

Google’s DeepMind AI research arm has just released a paper and a demo video showing how it is using its Gemini 1.5 AI model to give robots superior spatial navigation abilities in cluttered environments, and to let users interact with the robot and give it commands in natural language.

World Economic Forum touts 9 ways AI battles climate change

The World Economic Forum (you know, the folks who put on the annual Davos Forum, a sort of TED Talks for the global economic elite) has released a paper outlining 9 ways that AI is currently helping countries combat climate change. The uses of AI range from tracking how fast glaciers are melting to replanting forests with drones.

Fun News

AI can predict your political beliefs from a picture of your face

AI-powered facial recognition technology is increasingly used by law enforcement and in high-security settings. A recent paper in the journal American Psychologist describes how an AI model can use facial recognition technology to predict a person’s political beliefs from a photograph. The model’s accuracy was relatively low, with a correlation of .22 (perfect accuracy would give a correlation of 1.00), but was distinctly better than chance. Since facial recognition technology is improving rapidly, it seems likely that the accuracy of AI predictions of political beliefs, sexual orientation, and other private information will only grow. This poses important questions about how to protect privacy in the age of AI.

GPT-4 can change conspiracy beliefs

Conspiracy theorists are notoriously difficult to persuade to give up their conspiracy beliefs. However, researchers have found that using GPT-4 to engage conspiracy theorists in a fact-based dialogue about their beliefs caused participants to reduce their beliefs in the conspiracy by about 20%, a result which was sustained over a retest 2 months later, and which spilled over to reduce their beliefs in other conspiracies. AI chatbots seem to be extremely good at persuasion, making any use of them for disinformation troubling.

Enterprise adoption of AI is mostly employees using their personal ChatGPT account at work

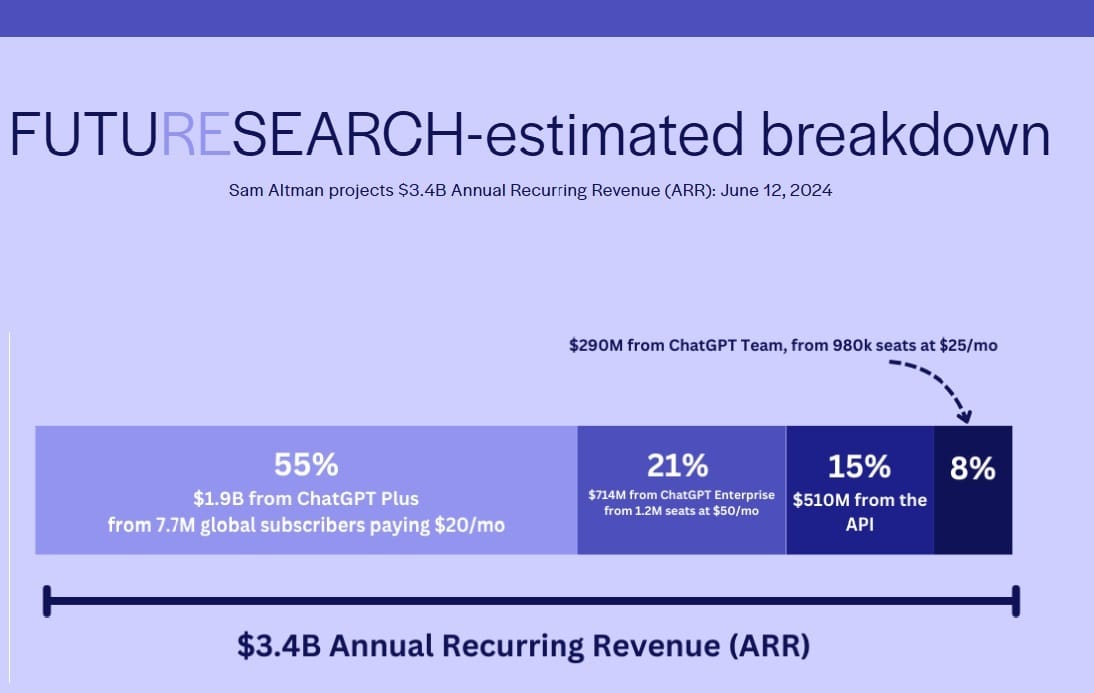

AI-powered research firm FutureSearch has released a report on the projected revenue for OpenAI. The report concludes that personal subscriptions will still make up the bulk of revenue, and by far the majority of users. Enterprise revenues and users lag well behind. Other research has shown that a substantial percentage of users who pay for the “Pro” version of ChatGPT use it at work, with or without the approval of their employer. This leads to the conclusion that “stealth” use of AI at work likely far outweighs official use.

OpenAI projections still see personal subscriptions making up the bulk of revenue.

AI-powered “vampire drone” recharges on powerlines

Scientists at the University of Southern Denmark have developed a “vampire drone” that hooks onto power lines and recharges its batteries through magnetic induction from the line. The drone will autonomously search for the nearest power line once its battery charge drops below a set level, and then hook onto the line with special grippers. The drone is being developed for power line inspections, but a wide range of other applications could use this technology for extended autonomous operation.

AI in Medicine

PSP robbed US Rep Wexton of her voice, AI gave it back

US Representative Jennifer Wexton was struck in the prime of her life with an incurable neurodegenerative disease, Progressive Supranuclear Palsy, or PSP. She became increasingly unable to speak, but recent advances in AI voice technology have allowed her to create an AI voice clone that closely mimics her pre-disease speech. She has now announced this advance online, and recounted how liberating it is for her to speak in her own voice again. The negative applications of voice cloning technology have captured most of the headlines, but Rep Wexton is an example of using AI for a good purpose.

US Representative Jennifer Wexton now speaks via an AI voice clone.

AI can estimate gestational age from blind ultrasound sweeps

Researchers at the University of North Carolina and in Zambia have developed an AI image analysis system that can accurately predict a fetus’ gestational age from “blind sweeps” of the mother’s abdomen, in which no real-time image is shown during the exam. The sweeps can be performed by untrained users in resource-poor settings, using low-cost ultrasound devices. Part of the promise of AI in medicine is that it will extend first-world care to the poorer regions of the globe, and in resource-rich countries, bring diagnostic tests out of the hospital and into the primary care physician’s office.

AMA identifies AI virtual scribes as one of the most effective strategies against MD burnout

The American Medical Association has released the results of a massive poll of physicians on burnout, finding that 6 medical specialties (Emergency Medicine, Internal Medicine, OBGYN, Family Medicine, Pediatrics, and Hospital Medicine) had extremely high rates of burnout, ranging from 44% to 56% of all physicians in the specialty. The AMA identified the 2 most effective interventions to alleviate burnout: creating a dedicated Wellness Committee, and alleviating administrative burdens, such as by providing AI scribes to reduce the drudgery of entering visit notes into the electronic medical record.

That's a wrap! More news next week.